F2070X Infrastructure Processing Unit (IPU)

2×10/25G, 8×10/25G, 2×100G

Data Sheet

Powerful Intel®-based Infrastructure Processing Unit (IPU)

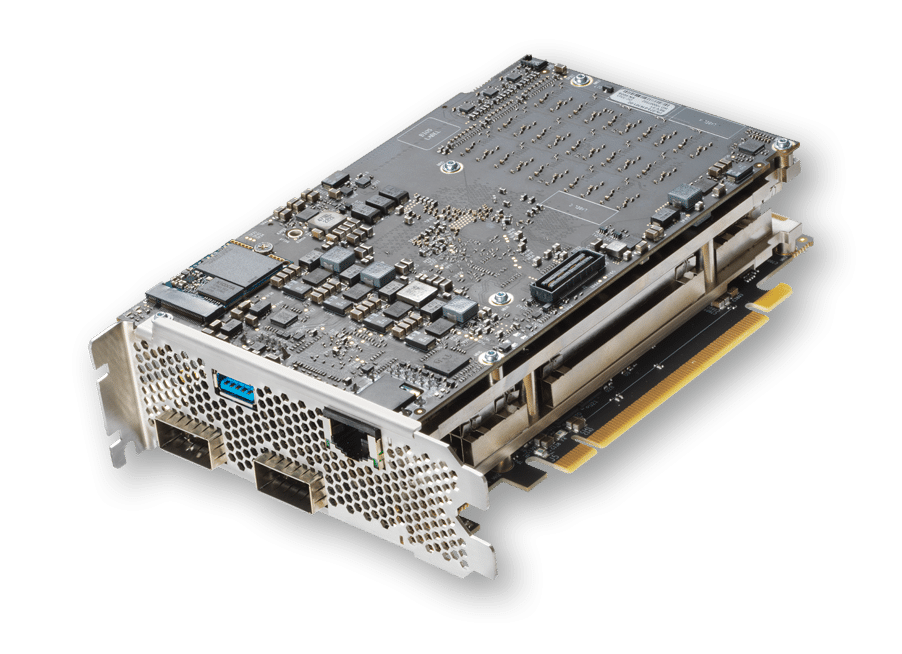

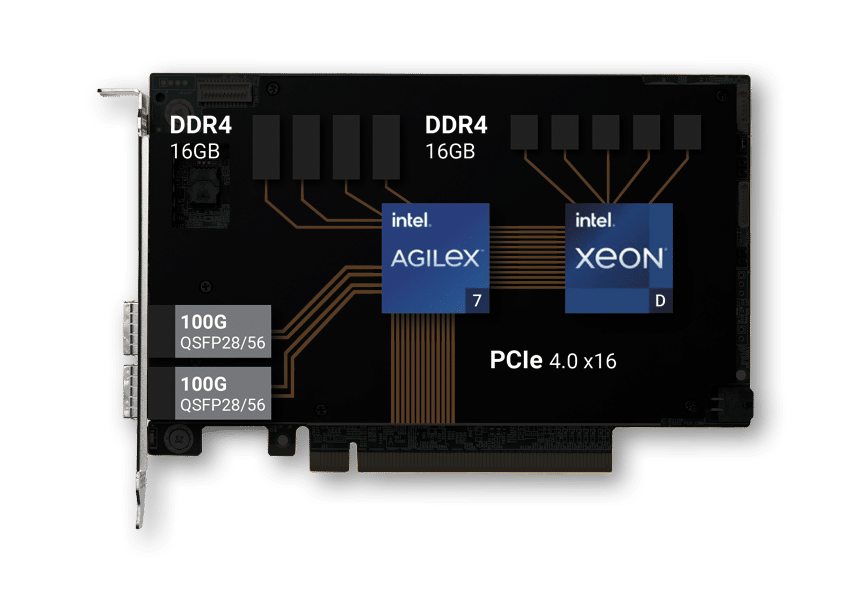

The Napatech F2070X Infrastructure Processing Unit (IPU) is a 2×100GbE PCIe card with an Intel® Agilex® AGFC023 FPGA and an Intel® Xeon® D SoC. The combination of an FPGA and a full-fledged Xeon CPU on one PCIe card allows for unique offload capabilities. Coupled with Napatech software, the F2070X offloads and accelerates network, storage and security workloads. It enables virtualized cloud, cloud-native or bare-metal server virtualization with tenant isolation.

Customization on demand

The F2070X offers both programmable hardware and programmable software, so the IPU can be tailored to the most demanding and specific needs in your network, and modified and enhanced over the life of the deployment. It is based on the Intel Application Stack Acceleration Framework (ASAF), which supports the integration of software and IP from Intel, Napatech, third parties and homegrown solutions. This one-of-a-kind architecture combines hardware performance with the speed of software innovation.

Scalable platform

The Napatech F2070X comes in a standard configuration and also supports several combinations of Intel® FPGAs, Xeon® D processors, and memory. This allows platform configurations to be tailored to the requirements of specific use cases.

SOLUTION HIGHLIGHTS

FPGA Device and Memory

- Intel Agilex AGFC023

- 2.3M LEs, 782.4K ALMs, 10.4K M20Ks

- Hardened crypto

- 4×4 GB DDR4 (ECC, 40b, 2666 MT/s)

SoC Processor and Memory

- Intel Xeon D 1736 SoC

- 8 Cores, 16 Threads

- 2.3 GHz, 3.4 GHz Turbo Freq.

- 15 MB Cache

- 2×8 GB DDR4 (ECC, 72b, 2933 MT/s)

- Up to 2 TB in M.2 NVMe x4 (2230/2242) slot for Operating System and applications
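The memory figures above imply a theoretical peak bandwidth for each memory pool. The following is a rough sketch of that arithmetic, not a measured result. It assumes that the 40b and 72b widths each include 8 ECC bits (leaving 32 and 64 data bits) and that "MT" means mega-transfers per second; neither assumption is stated in this data sheet.

```python
def peak_bandwidth_gbytes(banks: int, data_bits: int, mega_transfers: int) -> float:
    """Theoretical peak bandwidth in GB/s (10^9 bytes/s) summed over all banks."""
    bytes_per_transfer = data_bits / 8
    return banks * bytes_per_transfer * mega_transfers * 1e6 / 1e9


# Assumption: 40b = 32 data bits + 8 ECC bits; 72b = 64 data bits + 8 ECC bits.
fpga_peak = peak_bandwidth_gbytes(banks=4, data_bits=32, mega_transfers=2666)
soc_peak = peak_bandwidth_gbytes(banks=2, data_bits=64, mega_transfers=2933)

print(f"FPGA DDR4 theoretical peak: {fpga_peak:.1f} GB/s")
print(f"SoC DDR4 theoretical peak:  {soc_peak:.1f} GB/s")
```

Sustained bandwidth is lower in practice because of refresh, bank conflicts and controller overhead.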

PCI Express Interfaces

- PCIe Gen 4.0 x16 (16 GT/s) to the host

- PCIe Gen 4.0 x16 (16 GT/s) between the FPGA and SoC
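To relate the PCIe links to the 2×100G front-panel capacity, the sketch below estimates the usable line rate of a Gen 4.0 x16 link per direction. It accounts only for the 128b/130b line encoding used by PCIe Gen 3 and later; packet (TLP/DLLP) overhead is ignored, so real throughput is somewhat lower.

```python
GT_PER_LANE = 16        # GT/s per lane, from the data sheet
LANES = 16              # x16 link
ENCODING = 128 / 130    # 128b/130b line encoding (PCIe Gen 3 and later)
NETWORK_GBPS = 2 * 100  # two 100G front-panel ports

# Usable bit rate per direction after line encoding.
pcie_gbps = GT_PER_LANE * LANES * ENCODING
headroom_gbps = pcie_gbps - NETWORK_GBPS

print(f"PCIe Gen 4.0 x16: {pcie_gbps:.1f} Gbps ({pcie_gbps / 8:.1f} GB/s) per direction")
print(f"Headroom over 2x100G line rate: {headroom_gbps:.1f} Gbps")
```

Under these assumptions each x16 link carries roughly 252 Gbps per direction, which leaves margin above the 200 Gbps aggregate network rate.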

Front Panel Network Interfaces

- 2 QSFP28/56 ports

- 2×100GBASE-LR4/SR4/CR4

- 2×10/25GBASE-LR/SR/CR (QSFP/SFP adapter)

- 8×10/25GBASE-SR/CR (breakout cable)

- Dedicated RJ45 management port
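The three front-panel modes listed above differ in link count and aggregate capacity. The sketch below tabulates them; the `PortMode` type and the mode labels are illustrative only, and the totals assume every link runs at the highest rate of its mode.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PortMode:
    """One front-panel configuration (illustrative type, not a Napatech API)."""
    label: str
    links: int
    max_gbps_per_link: int

    @property
    def total_gbps(self) -> int:
        return self.links * self.max_gbps_per_link


MODES = [
    PortMode("2x100G (native QSFP)", links=2, max_gbps_per_link=100),
    PortMode("2x10/25G (QSFP/SFP adapter)", links=2, max_gbps_per_link=25),
    PortMode("8x10/25G (breakout cable)", links=8, max_gbps_per_link=25),
]

for mode in MODES:
    print(f"{mode.label:<30} {mode.links} links, up to {mode.total_gbps} Gbps total")
```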

Supported Compute and Memory Devices (Mount Options)

- FPGA: Agilex AGFC022 or AGFC027

- SoC: All Intel Xeon D 1700-Series CPUs

- FPGA Memory

  - 3×4 GB DDR4 (ECC, 40b, 3200 MT/s) + 1×8 GB DDR4 (ECC, 72b, 3200 MT/s)

- SoC Memory

  - 3×8 GB DDR4 (ECC, 72b, 2900 MT/s)

  - 3×16 GB DDR4 (ECC, 72b, 2900 MT/s)

Size

- Full-height, half-length, dual-slot PCIe form factor

Power and Cooling

- Passive cooling

- Max power consumption for standard HW configuration: 150 W

- Max power dissipation supported by platform: 250 W

Time Synchronization (Mount Options)

- Dedicated PTP RJ45 Port

- External SMA-F Connector (PPS/10MHz I/O)

- Internal MCX-F Connector (PPS/10MHz I/O)

- Stratum 3 TCXO or Stratum 3e OCXO

- IEEE 1588v2 support

Board Management

- Ethernet, USB (Front panel), UART (Internal) connectivity

- Secure FPGA image update

- Wake-on-LAN

- MCTP over SMBus

- Dedicated NC-SI RBT internal port

- PLDM for Monitor and Control (DSP0248)

- PLDM for FRU (DSP0257)

SoC Processor Operating System

- Fedora Linux 40 (kernel version 6.11.x)

- UEFI BIOS

- PXE Boot support

- Full shell access via SSH and UART

Supported Host Processor Operating Systems

- Fedora Linux 40 (kernel version 6.11.x)

- Rocky Linux 9.4 and 9.5 (kernel version 5.14.x)

Environment and Approvals

- EU, US, APCJ Regulatory approvals

- Thermal, Shock, Vibration tested

- UL Marked, RoHS, REACH

- Temperature range: -5 °C to +45 °C

- ASHRAE class A2

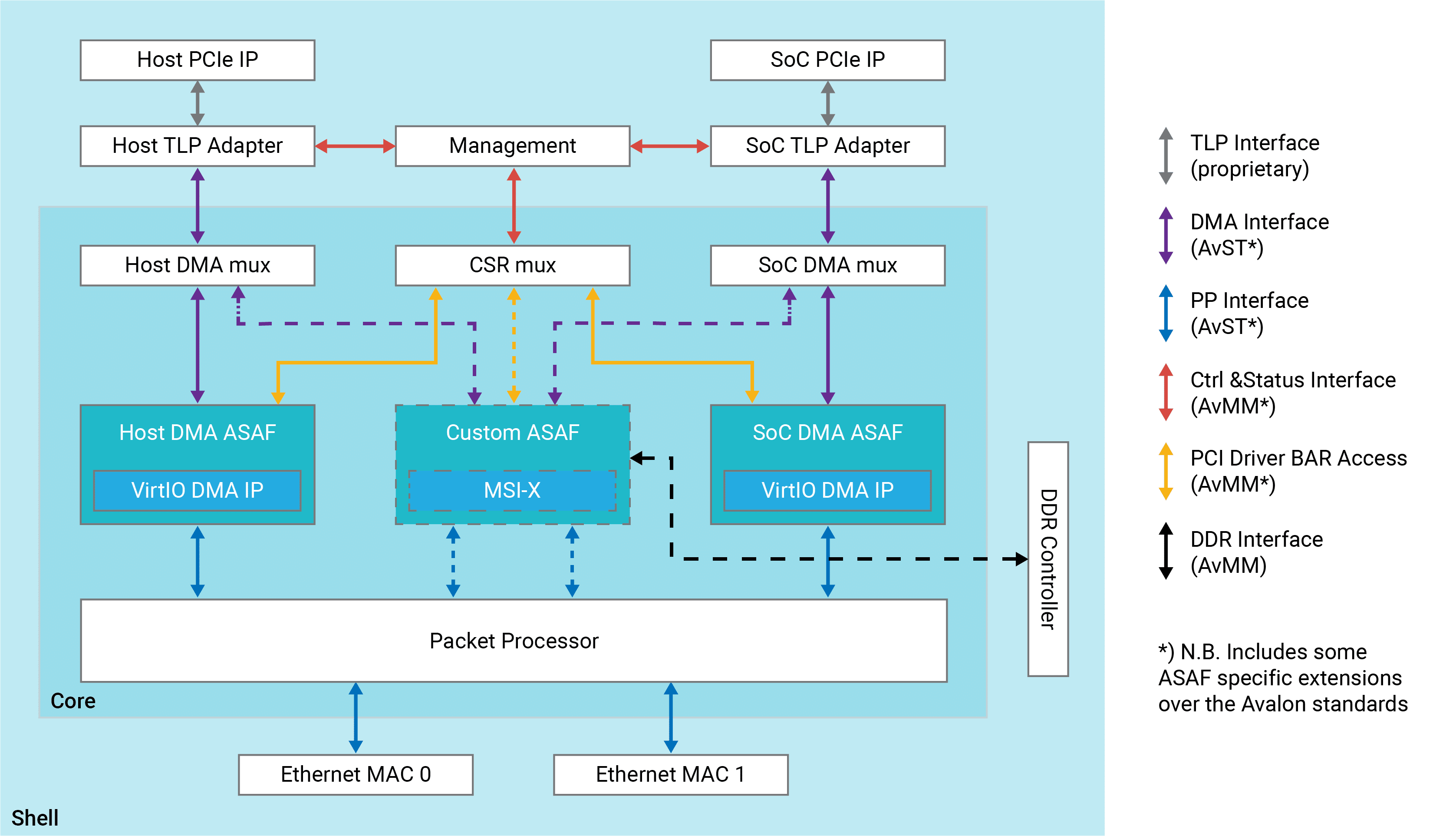

Application Stack Acceleration Framework (ASAF)

- Framework for embedding customer Accelerator Functional Units (AFU) implementing workload acceleration/offload in FPGA

- 6 AFUs supported

- Throughput up to 200Gbps

- Look-aside and inline AFU configurations

- Pre-integrated AFUs for Host virtio-net DMA, SoC virtio-net DMA and packet processor with fundamental NIC functions
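For sizing an AFU pipeline, the 200 Gbps throughput figure can be translated into a packet rate. The sketch below uses the standard Ethernet per-frame wire overhead of 20 bytes (7-byte preamble, 1-byte start-of-frame delimiter, 12-byte inter-frame gap). It shows a theoretical line-rate bound, not a measured F2070X result.

```python
ETHERNET_OVERHEAD_BYTES = 20  # 7 preamble + 1 SFD + 12 inter-frame gap


def max_packets_per_second(line_rate_gbps: float, frame_bytes: int) -> float:
    """Upper bound on frames per second at a given Ethernet line rate."""
    bits_on_wire = (frame_bytes + ETHERNET_OVERHEAD_BYTES) * 8
    return line_rate_gbps * 1e9 / bits_on_wire


for size in (64, 512, 1518):
    mpps = max_packets_per_second(200, size) / 1e6
    print(f"{size:>5}-byte frames: {mpps:6.1f} Mpps")
```

At minimum-size 64-byte frames the bound is close to 300 Mpps, which is the worst case a line-rate inline AFU would need to sustain.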

ASAF Framework

Open vSwitch (OVS) Offload

- 2x100G connectivity to network

- VIRTIO ports for the Host side and SoC side

- Up to 64 virtual ports for OVS output

- 4 million entries for each fast path

- Up to 4K entries each for default lookup and wildcard lookup

- 4 million connection-tracking entries for each fast path

- 11 stages of parsing

  - Outer Header L1: Source Ethernet port, Source VF port

  - Outer Header L2: DMAC, SMAC, EtherType, Outermost VLAN

  - Outer Header L3: Source IP (IPv4 and IPv6), Destination IP (IPv4 and IPv6), Protocol

  - Outer Header L4: Destination Port, Source Port, TCP Flags

  - Inner Header: VXLAN, Geneve

  - Inner L2: DMAC, SMAC, EtherType, Outermost VLAN

  - Inner L3: Source IP (IPv4 and IPv6), Destination IP (IPv4 and IPv6), Protocol

  - Inner L4: Destination Port, Source Port, TCP Flags

- Basic action commands

  - Drop

  - Forward to port

  - Forward to VF port

  - Meter Index

  - Stats count

- Modify action commands

  - Modify, Strip, or Insert VLAN

  - Strip-N-Forward

  - Insert-N-Set

- Tunnel and NAT action commands

  - Encap VXLAN/Geneve

  - Decap VXLAN/Geneve

  - NAT L2

  - NAT L2/L3

  - NAT L2/L3/L4
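The match fields and actions listed above can be pictured as a flow table keyed on parsed header fields. The sketch below is a purely illustrative software model, not the Napatech or OVS API: `FlowKey`, `Action` and `FlowTable` are hypothetical names, only a subset of the outer-header fields is modeled, and the lookup order (exact match first, then wildcard entries, then a default action) is an assumption about how the fast path, wildcard and default lookups relate.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class FlowKey:
    """Subset of the outer-header match fields (hypothetical model)."""
    in_port: int
    dmac: str
    smac: str
    ethertype: int
    src_ip: str
    dst_ip: str
    ip_proto: int
    src_port: int
    dst_port: int


@dataclass(frozen=True)
class Action:
    kind: str                     # "drop", "forward_port" or "forward_vf"
    target: Optional[int] = None  # port or VF index for forward actions


class FlowTable:
    def __init__(self, default: Action = Action("drop")) -> None:
        self.exact: dict[FlowKey, Action] = {}
        self.wildcard: list[tuple[dict, Action]] = []
        self.default = default

    def add_exact(self, key: FlowKey, action: Action) -> None:
        self.exact[key] = action

    def add_wildcard(self, fields: dict, action: Action) -> None:
        """Match only the given fields; all other fields are wildcarded."""
        self.wildcard.append((fields, action))

    def lookup(self, key: FlowKey) -> Action:
        action = self.exact.get(key)  # assumed first: exact-match lookup
        if action is not None:
            return action
        for fields, wc_action in self.wildcard:  # assumed second: wildcard entries
            if all(getattr(key, name) == value for name, value in fields.items()):
                return wc_action
        return self.default  # assumed last: default action


table = FlowTable()
tcp_key = FlowKey(0, "02:00:00:00:00:02", "02:00:00:00:00:01", 0x0800,
                  "10.0.0.1", "10.0.0.2", 6, 40000, 443)
table.add_exact(tcp_key, Action("forward_vf", 3))
table.add_wildcard({"ip_proto": 17}, Action("forward_port", 1))

udp_key = replace(tcp_key, ip_proto=17, dst_port=53)
other_key = replace(tcp_key, dst_port=80)
for key in (tcp_key, udp_key, other_key):
    print(key.ip_proto, key.dst_port, table.lookup(key))
```

In hardware the exact-match stage would typically be a hash or TCAM lookup rather than a Python dictionary; the model only illustrates how match fields and actions relate.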

Storage Offload

- 2x100G connectivity to the storage network

- NVMe-oF TCP offload

- Presents 16 block devices to the Host (virtio-blk)

- Compatible with the virtio-blk drivers present in the latest RHEL and Ubuntu Linux distributions

- No proprietary software and drivers required in the Host

- No network interfaces exposed to the Host

- NVMe/TCP initiator running on the SoC

- Offloads all NVMe/TCP operations from the host CPU to the IPU

- No access to the SoC from the Host (Airgap)

- Storage configuration over SPDK RPC interface

- NVMe/TCP Multipath support
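Since storage is configured over the SPDK RPC interface, a configuration step can be expressed as a JSON-RPC 2.0 request. The sketch below only builds and prints such a request for SPDK's `bdev_nvme_attach_controller` method; it does not send it. Whether the F2070X storage software exposes exactly this method, and whether the optional `multipath` parameter is available in its SPDK version, are assumptions. The bdev name, IP address and NQN are placeholders.

```python
import json
from itertools import count
from typing import Optional

_request_ids = count(1)


def attach_nvme_tcp_request(bdev_name: str, target_ip: str, subnqn: str,
                            port: int = 4420,
                            multipath: Optional[str] = None) -> dict:
    """Build a JSON-RPC request that attaches a remote NVMe/TCP controller."""
    params = {
        "name": bdev_name,
        "trtype": "tcp",
        "adrfam": "ipv4",
        "traddr": target_ip,
        "trsvcid": str(port),
        "subnqn": subnqn,
    }
    if multipath is not None:
        # Assumption: the SPDK build accepts "disable", "failover" or "multipath".
        params["multipath"] = multipath
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "bdev_nvme_attach_controller",
        "params": params,
    }


# Placeholder values: 192.0.2.10 is a documentation address, the NQN is an example.
request = attach_nvme_tcp_request("Nvme0", "192.0.2.10", "nqn.2016-06.io.spdk:cnode1")
print(json.dumps(request, indent=2))
```

On the Host, the resulting volumes would appear as ordinary virtio-blk devices, with no NVMe/TCP configuration visible to it.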

Security Offload

- 2x100G connectivity to network

- TCP+TLS offload

- Presents up to 16 network devices to the Host (virtio-net)

- TLS 1.2/1.3 encryption offload

- OpenSSL support

- NGINX-based HTTP(S) reverse proxy with caching

- WebSockets support

- Load balancing to host

- Web server acceleration (reduced page load time) with static file caching and image optimization
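One way to check a deployment from the client side is to confirm that the reverse proxy negotiates one of the offloaded TLS versions. The sketch below uses Python's standard `ssl` module to restrict a client to TLS 1.2 and 1.3 and report the negotiated version. It is a generic client-side check, not part of the F2070X software, and the host name passed to `negotiated_version` is supplied by the user.

```python
import socket
import ssl


def make_client_context() -> ssl.SSLContext:
    """Client context limited to the TLS versions listed in the data sheet."""
    ctx = ssl.create_default_context()
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    ctx.maximum_version = ssl.TLSVersion.TLSv1_3
    return ctx


def negotiated_version(host: str, port: int = 443, timeout: float = 5.0) -> str:
    """Connect to host:port and return the negotiated TLS version string."""
    ctx = make_client_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            return tls.version()


ctx = make_client_context()
print(ctx.minimum_version.name, ctx.maximum_version.name)
```

Calling `negotiated_version` against the proxy's address returns a string such as `TLSv1.3` when the handshake succeeds, and raises `ssl.SSLError` if no version in the allowed range can be agreed.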

Orderable products

| Product | Data Rate |
| --- | --- |
| F2070X-2×100 | 2×100 Gbps |