As Communications Service Providers (CSPs) worldwide scale up the deployments of their 5G networks, they face strong pressure to optimize their Return on Investment (RoI), given the massive expenses they already incurred to acquire spectrum as well as the ongoing costs of infrastructure rollouts.

The road to FPGA-based reconfigurable computing

FPGA technology has traditionally provided a dedicated functionality in use cases where reduced volume hasn’t justified the deployment of ASSP technology, or where its reprogrammable nature serves a purpose. Some key events that have taken place recently within the FPGA scene have, however, brought a huge focus on a new high-volume use case for deployment of FPGA technology in general compute platforms, often referred to as reconfigurable computing platforms. Following are the events that sparked this new wave:

- Intel’s acquisition of Altera in 2015, one of the two dominant providers of FPGA technology.

- Microsoft’s launch at Ignite 2016 of the Catapult v2 FPGA based platform in datacenter servers supporting the Azure framework

- The launch of the FPGA based F1 instance in the Amazon AWS EC2 framework in early 2017

Intel, Microsoft, and Amazon represent different parts of the value chain. Intel, as the device vendor, seems to have come to a point in the CPU life cycle where two different ways of squeezing more server performance from a CPU has been exhausted:

- First event: the frequency race – where the clock frequency was increased with each new CPU process node.

- Second event: the multi-core race – where the number of cores is increased with each new CPU process node.

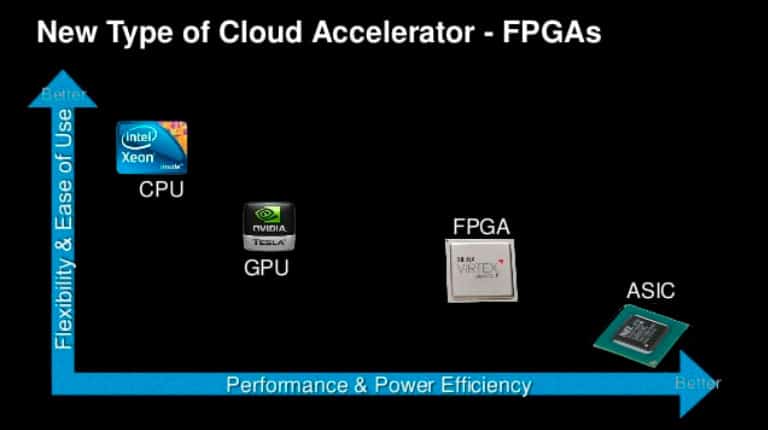

Intel’s acquisition of Altera suggests that FPGA technology will fuel the next server performance race now with the CPU as the ‘conductor’ and with the FPGA as the ‘workhorse’, executing/accelerating applicable functions. Figure 1. documents the performance benefits of the FPGA over the CPU sourcing this trend.

- High device cost

- Complicated description interface

- Challenging thermal environment

Cost

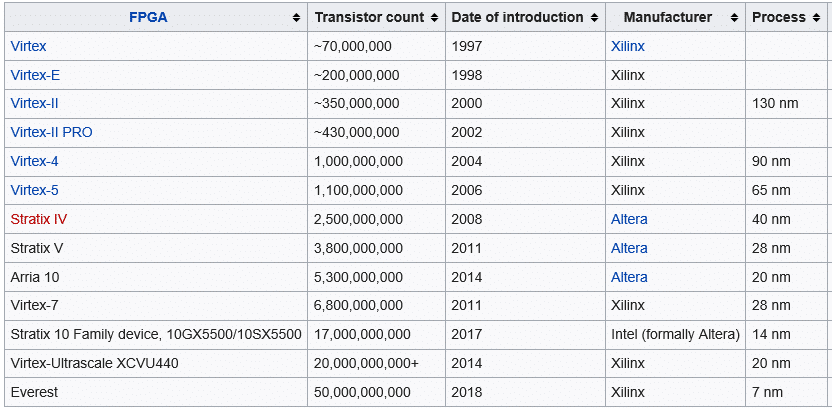

FPGA technology has historically had a significant price tag attached as it represents state of the art chip technology, deploying the latest process node as the mean to maximize the number of logical resources available for the demanding use cases. Figure 2. documents this trend by listing the transistor count for key FPGA device families over the last 2 decades.

The reconfigurable computing platform use case calls for a completely different price strategy for the high-end FPGA devices. This device type was previously solely premium priced, funding the expensive mask sets for the silicon manufacturing process and the R&D activities invested in the toolchain – and device development. The two dominant providers of FPGA technology, Intel and Xilinx, are currently struggling to support the new reconfigurable computing platform use case with the needed low-cost pricing, without jeopardizing revenue from the current niche applications. This is a ‘chicken and egg’ situation that I am sure will find a resolution once the big volumes promised by the new use case materializes.

Description Interface

The bulk part of FPGA functionality in current dedicated use cases are typically described through low-level Hardware Description Languages (HDL) like Verilog or VHDL, and compiled, verified, validated and ‘place and routed’ in complex tool environments. Both the HDLs and the toolchains require niche competences with a high entry barrier knowledge-wise. In order for the FPGA-based reconfigurable computing platform to obtain the expected industry momentum, this burden needs to be resolved/relaxed. The end goal could be to enable complete transparency to the FPGA technology through a relevant abstraction layer, offloading CPU resources through profiling of the performance bottlenecks in the C-code through seamless porting to FPGA targets. The world is not quite there yet but a lot of concurrent initiatives including OpenCL, SystemC, sdxl & P4, is moving us in that direction, which is why this is an obstacle that can be overcome.

Thermal Challenge

While current dedicated FPGA applications have a “known” power dissipation profile enabling a complementary thermal design, the FPGA-based reconfigurable computing platform is faced with an ‘unknown’ power dissipation profile caused by its reconfigurable nature. This challenges the use case with the need for an “unknown” sized cooling solution. Over time, FPGA devices are expected to become an integral part of the server motherboard and therefore, likely to benefit from similar exhaustive cooling solutions as the current CPU sockets. Until then, and probably in parallel with, the bulk part of the reconfigurable computing FPGA sockets is expected to be add-in boards, typically PCI-based. In this environment, the physical size of the cooling solution allowed for by the PCI form-factor and neighboring add-in cards will be the limiting factor for the performance of the reconfigurable computing platform rather than the acceleration performance of the FPGA itself. This fact calls for graceful handling of FPGA overtemperature events like the reduction in clock frequency of CPUs running hot. While control through the clock frequency reduction seems like the obvious/only way to control CPUs from running hot, more differentiated control seems applicable when limiting FPGAs running hot in order to meet use case expectations.

Napatech has, since its founding in 2003, been true to the original business plan of becoming the leading OEM provider of FPGA-based PCI add-in boards, complemented by an exhaustive configurable networking-focused feature set. We haven’t been successful with this goal without taking complete responsibility for all hierarchy levels in the integration process between the FPGA-based add-in board and the server environment, ranging from the lowest physical levels like the power and thermal environment, all the way to the architecture of the server and deployed CPUs. With an installed base of over a 100 thousand FPGA-based PCI add-in boards working in random pick servers and random pick slots, it is obvious that Napatech is well positioned for the age of FPGA-based reconfigurable computing.