Light at the end of the tunnel – OVS offload shows 6x performance improvement

From partial to full OVS offload

Napatech has been working on Open vSwitch (OVS) hardware acceleration and offload via DPDK for some time. The work started with Flow Hardware Offload, which has been available in OVS since version 2.10. It introduced a partial offload of megaflows: packets are tagged with metadata that lets OVS bypass its cache look-ups and forward them directly to their destination. See the Red Hat blog for details.
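For reference, flow hardware offload in OVS is switched on through the `other_config:hw-offload` key, and the change takes effect once `ovs-vswitchd` has been restarted. The sketch below only prints the command so it can be reviewed before being run; the helper name `hw_offload_cmd` is made up for this illustration and is not part of OVS.

```shell
#!/bin/bash
# Sketch: print the ovs-vsctl command that toggles flow hardware offload.
# hw_offload_cmd is a hypothetical helper; it does not touch the system.
hw_offload_cmd() {
    # $1: "true" (default) or "false"
    printf 'ovs-vsctl set Open_vSwitch . other_config:hw-offload=%s\n' "${1:-true}"
}

hw_offload_cmd
```

Piping the output to `sh` on a host with OVS installed would apply the setting.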

Lately, Napatech has been working on a full OVS offload implementation that builds on the partial offload already upstreamed. This is a data-plane offload, not to be confused with bare-metal offload: the control plane and slow path still reside on the host. The same approach of offloading megaflows applies. What is new is that the VirtQueues are no longer handled by OVS but directly by the SmartNIC’s Poll Mode Driver (PMD). Packets that the SmartNIC fast path can handle never reach OVS on either ingress or egress, hence OVS is fully offloaded.

6x performance improvement

To fully offload the fast path, the OVS actions must also be performed in the SmartNIC, which is achievable now that Napatech has introduced support for VXLAN and VLAN offload. Combined with the full OVS offload, the VXLAN and VLAN offload shows up to ~6x performance improvement compared to a basic OVS+DPDK solution running on a standard NIC.

VXLAN performance

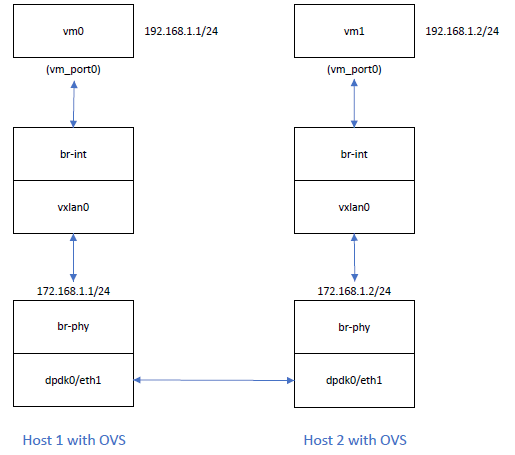

The ~6x performance improvement was measured using the following setup:

- The test setup consists of two servers, each running OVS.

- Server 1 runs a program that receives packets, swaps the source and destination MAC and IP addresses, and retransmits the packets.

- Server 2 runs a traffic generator.

- A VXLAN tunnel is established between the two servers.

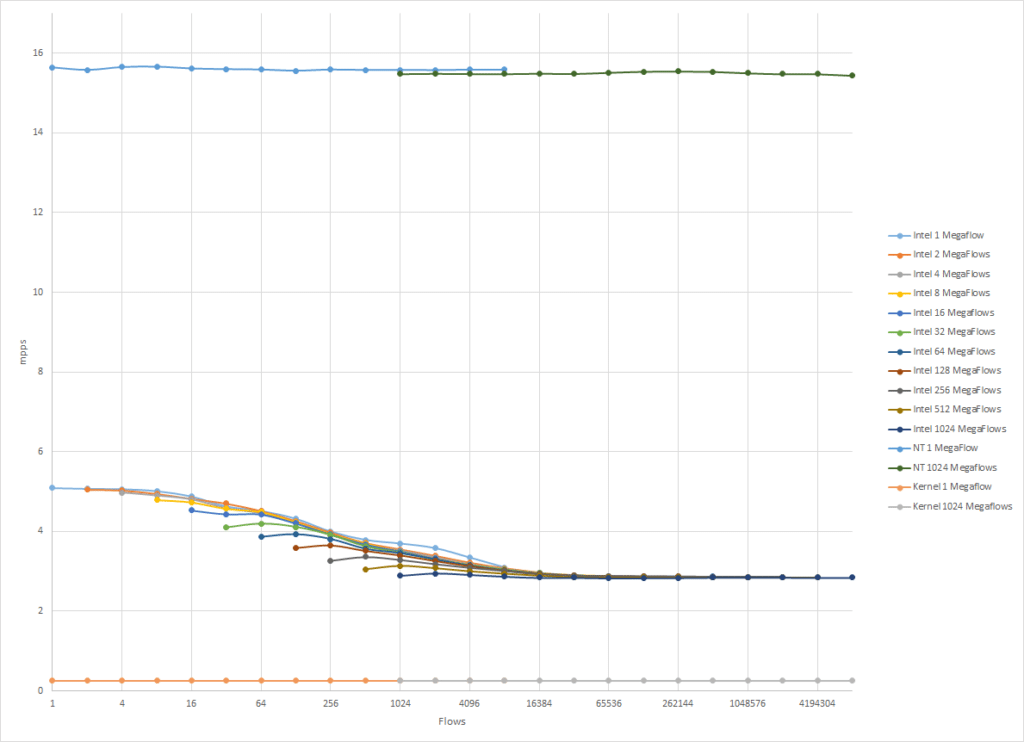
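A tunnel like the one in this setup could be created by adding a VXLAN port to the bridge on each server. This is a minimal sketch, not the configuration used in the test: the port name `vxlan0`, the address `192.0.2.2`, and the VNI `42` are placeholders, and `vxlan_port_cmd` is a hypothetical helper that prints the command rather than running it.

```shell
#!/bin/bash
# Sketch: print the ovs-vsctl command that adds a VXLAN port to a bridge.
# All argument values used below are placeholders, not taken from the test.
vxlan_port_cmd() {
    bridge=$1      # OVS bridge to attach the tunnel port to
    port=$2        # name of the new VXLAN port
    remote_ip=$3   # tunnel endpoint on the other server
    vni=$4         # VXLAN network identifier
    printf 'ovs-vsctl add-port %s %s -- set interface %s type=vxlan options:remote_ip=%s options:key=%s\n' \
        "$bridge" "$port" "$port" "$remote_ip" "$vni"
}

# On server 1, pointing at server 2 (address is made up):
vxlan_port_cmd br-int vxlan0 192.0.2.2 42
```

The mirror-image command, with the other server's address as `remote_ip`, would be needed on the second server.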

Performance is measured by creating an increasing number of megaflows (unique flows installed with ovs-ofctl) and an increasing number of unique flows within each megaflow.

An example configuration would be:

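    #!/bin/bash
    # $1 is assumed to be the number of megaflows to create.

    # Start from an empty flow table
    ovs-ofctl del-flows br-int

    # One rule per UDP destination port, i.e. one megaflow per port
    for port in $(seq 1 "$1")
    do
        ovs-ofctl add-flow br-int "check_overlap,in_port=1,ip,udp,tp_dst=$port,actions=output:2"
    done

    # Return path: everything arriving on port 2 goes back out on port 1
    ovs-ofctl add-flow br-int in_port=2,actions=output:1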

Traffic is generated by varying the UDP source and destination ports: each destination port corresponds to one megaflow, and there are one or more source ports per destination. The test setup stresses both the exact match cache and the megaflow cache of OVS.
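To make the traffic pattern concrete, the following sketch lists the UDP port pairs a generator would cycle through for a given number of megaflows and flows per megaflow. It is an illustration only: the function name `flow_matrix`, the destination ports starting at 1, and the base source port 10000 are assumptions, not details of the actual traffic generator.

```shell
#!/bin/bash
# Sketch: enumerate (destination, source) UDP port pairs for the test traffic.
# Each destination port hits one megaflow; each source port under it is a
# distinct flow within that megaflow. Port numbering here is hypothetical.
flow_matrix() {
    megaflows=$1
    flows_per_megaflow=$2
    src_base=${3:-10000}   # arbitrary first source port
    dst=1
    while [ "$dst" -le "$megaflows" ]; do
        i=0
        while [ "$i" -lt "$flows_per_megaflow" ]; do
            echo "udp_dst=$dst udp_src=$((src_base + i))"
            i=$((i + 1))
        done
        dst=$((dst + 1))
    done
}

# 2 megaflows with 3 flows each gives 6 distinct flows
flow_matrix 2 3
```

Raising the first argument grows the number of megaflow cache entries, while raising the second grows the number of exact match cache entries per megaflow.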

Please also see the Napatech OVS demo for more details on the performance improvements.

Napatech is working to get the VXLAN offload upstreamed, so stay tuned.